UnifiedClassification

- class hana_ml.algorithms.pal.unified_classification.UnifiedClassification(func, multi_class=None, massive=False, group_params=None, pivoted=False, **kwargs)

The Python wrapper for the SAP HANA PAL unified classification function.

Compared with the original classification interfaces, it supports the following new features:

Easy switching between classification algorithms

Automatic dataset partitioning

A built-in model evaluation procedure

Support for more metrics

- Parameters:

- func : str

The name of a specified classification algorithm. The following algorithms are supported:

'DecisionTree'

'HybridGradientBoostingTree'

'LogisticRegression'

'MLP'

'NaiveBayes'

'RandomDecisionTree'

'SVM'

Note

'LogisticRegression' covers both binary-class and multi-class logistic regression. By default, binary-class logistic regression is assumed. To switch to multi-class logistic regression, set func to 'LogisticRegression' and specify multi_class=True.

- multi_class : bool, optional

Specifies whether or not to use multi-class logistic regression.

Only valid when func is 'LogisticRegression'. Defaults to None.

- massive : bool, optional

Specifies whether or not to use massive mode.

True : massive mode.

False : single mode.

For parameter setting in massive mode, you can use either group_params (see below) or the original parameters. Original parameters apply to all groups. However, if you define any parameters for a group in group_params, none of the original parameter settings apply to that group.

For example, if 'solver' is set in group_params for Group_1 and 'max_iter' is set as an original parameter, the 'max_iter' setting is not applied to Group_1.

Defaults to False.
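The precedence rule above can be sketched in plain Python. resolve_group_params is a hypothetical helper written for illustration only (it is not part of hana_ml), and the parameter values are placeholders; the sketch mirrors just the documented rule that a group with an entry in group_params ignores the original parameters entirely.

```python
from typing import Any, Dict, Hashable, Optional


def resolve_group_params(
    original_params: Dict[str, Any],
    group_params: Optional[Dict[Hashable, Dict[str, Any]]],
    group_id: Hashable,
) -> Dict[str, Any]:
    """Return the parameter settings that apply to one group (illustration only).

    If the group has an entry in group_params, only that entry applies and the
    original (instance-wide) parameters are ignored for the group; otherwise the
    original parameters apply.
    """
    if group_params and group_id in group_params:
        return dict(group_params[group_id])
    return dict(original_params)


original = {"max_iter": 10}                  # applies to every group by default
per_group = {"Group_1": {"solver": "auto"}}  # placeholder per-group settings
print(resolve_group_params(original, per_group, "Group_1"))  # {'solver': 'auto'}
print(resolve_group_params(original, per_group, "Group_2"))  # {'max_iter': 10}
```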

- group_params : dict, optional

If massive mode is activated (massive is True), the input data for classification is divided into groups, and different classification parameters can be applied to each group. This parameter specifies the parameter values of the chosen classification algorithm func for different groups in dict format: keys correspond to group_key values, and each value is a dict of parameter assignments for that group, i.e. {<group ID>: {<parameter name>: <value>, ...}, ...}.

Valid only when massive is True. Defaults to None.

- pivoted : bool, optional

If True, enables the PAL unified classification function for pivoted data. In this case, meta_data must be provided to fit().

Defaults to False.

- **kwargs : keyword arguments

Arbitrary keyword arguments; please refer to the corresponding algorithm for the accepted key-value pairs.

Note that some parameters of the underlying algorithms are disabled in unified classification:

'DecisionTree' : DecisionTreeClassifier

Disabled parameters: output_rules, output_confusion_matrix.

Parameters removed from initialization but can be specified in fit(): categorical_variable, bins, priors.

'HybridGradientBoostingTree' : HybridGradientBoostingClassifier

Disabled parameters: calculate_importance, calculate_cm.

Parameters removed from initialization but can be specified in fit(): categorical_variable.

'LogisticRegression' : LogisticRegression

Disabled parameters: pmml_export.

Parameters removed from initialization but can be specified in fit(): categorical_variable, class_map0, class_map1.

Parameters with changed meaning:

json_export, where False now means 'Exports multi-class logistic regression model in PMML'.

'MLP' : MLPClassifier

Disabled parameters: functionality.

Parameters removed from initialization but can be specified in fit(): categorical_variable.

'NaiveBayes' : NaiveBayes

Parameters removed from initialization but can be specified in fit(): categorical_variable.

'RandomDecisionTree' : RDTClassifier

Disabled parameters: calculate_oob.

Parameters removed from initialization but can be specified in fit(): categorical_variable, strata, priors.

'SVM' : SVC

Parameters removed from initialization but can be specified in fit(): categorical_variable.

For more parameter mappings of hana_ml and HANA PAL, please refer to the doc page: Parameter Mappings

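For example, for the decision tree algorithm, the algorithm's parameters can either be collected in a dictionary and unpacked with ** when creating the instance (the pattern used in Case 1 of the Examples below), or be passed directly as keyword arguments in a single constructor call.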
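The two calling forms are equivalent in Python. The sketch below demonstrates this with a hypothetical stand-in class, UnifiedClassificationStandIn, which only records its arguments so that it runs without a HANA connection; max_depth and split_threshold are used as example DecisionTreeClassifier parameter names with arbitrary values (check the DecisionTreeClassifier reference for the full list).

```python
from typing import Any, Dict


class UnifiedClassificationStandIn:
    """Hypothetical stand-in that only records its arguments.

    It is NOT hana_ml's UnifiedClassification; it exists so this snippet runs
    without a HANA connection.
    """

    def __init__(self, func: str, **kwargs: Any) -> None:
        self.func = func
        self.kwargs: Dict[str, Any] = kwargs


# Form 1: collect the algorithm parameters in a dict and unpack it with **.
dt_params = dict(max_depth=5, split_threshold=1e-5)
uc_a = UnifiedClassificationStandIn(func='DecisionTree', **dt_params)

# Form 2: pass the same parameters directly as keyword arguments.
uc_b = UnifiedClassificationStandIn(func='DecisionTree', max_depth=5, split_threshold=1e-5)

print(uc_a.kwargs == uc_b.kwargs)  # True
```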

Examples

Case 1: Assume the training DataFrame is df_fit, data for prediction is df_predict and for score is df_score.

Train the model:

>>> rdt_params = dict(random_state=2, split_threshold=1e-7, min_samples_leaf=1, n_estimators=10, max_depth=55)

>>> uc_rdt = UnifiedClassification(func = 'RandomDecisionTree', **rdt_params)

>>> uc_rdt.fit(data=df_fit, partition_method='stratified', stratified_column='CLASS', partition_random_state=2, training_percent=0.7, ntiles=2)

Output:

>>> uc_rdt.importance_.collect()
  VARIABLE_NAME  IMPORTANCE
0       OUTLOOK    0.203566
1          TEMP    0.479270
2      HUMIDITY    0.317164
3         WINDY    0.000000

Prediction:

>>> res = uc_rdt.predict(data=df_predict, key="ID")[['ID', 'SCORE', 'CONFIDENCE']].collect()
>>> res
   ID SCORE  CONFIDENCE
0   0  Play         1.0
1   1  Play         0.8
2   2  Play         0.7
3   3  Play         0.9
4   4  Play         0.8
5   5  Play         0.8
6   6  Play         0.9

Score:

>>> score_res = uc_rdt.score(data=df_score, key='ID', max_result_num=2, ntiles=2)[1]
>>> score_res.head(4).collect()
   STAT_NAME          STAT_VALUE   CLASS_NAME
0        AUC   0.673469387755102         None
1     RECALL                   0  Do not Play
2  PRECISION                   0  Do not Play
3   F1_SCORE                   0  Do not Play

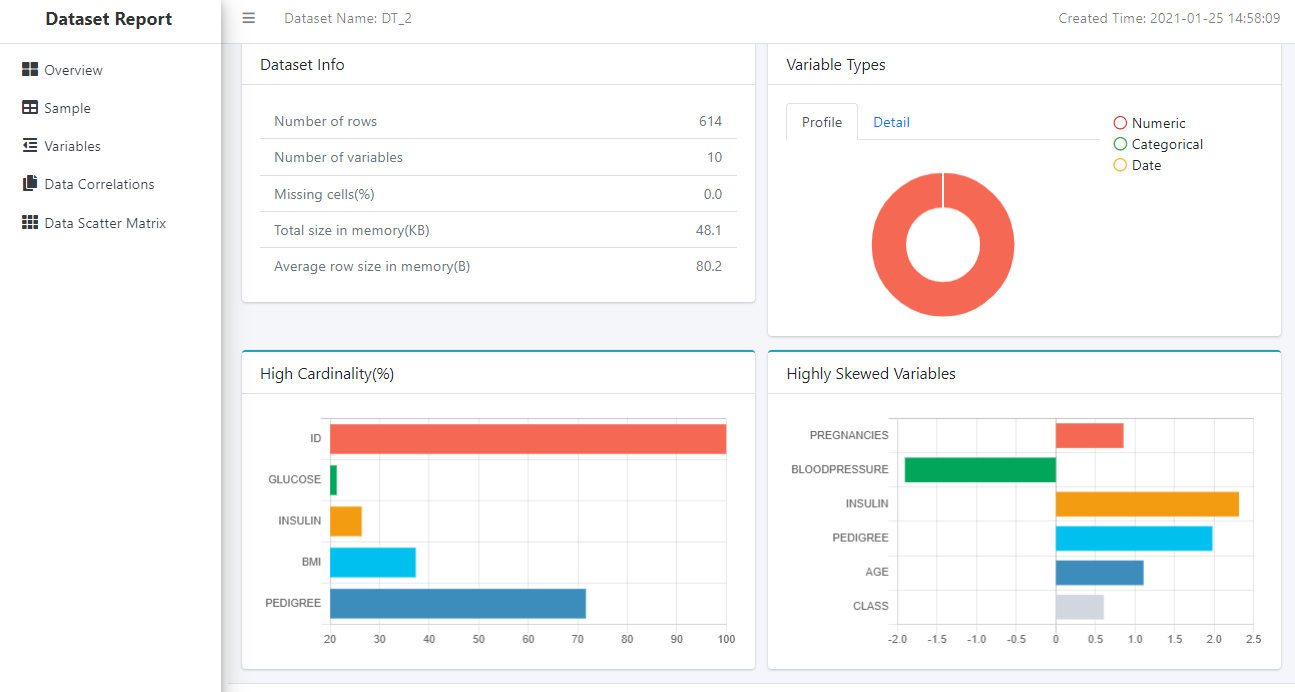

Case 2: UnifiedReport for UnifiedClassification is shown as follows:

>>> from hana_ml.visualizers.unified_report import UnifiedReport
>>> from hana_ml.algorithms.pal.model_selection import GridSearchCV
>>> hgc = UnifiedClassification('HybridGradientBoostingTree')
>>> gscv = GridSearchCV(estimator=hgc,
...                     param_grid={'learning_rate': [0.1, 0.4, 0.7, 1],
...                                 'n_estimators': [4, 6, 8, 10],
...                                 'split_threshold': [0.1, 0.4, 0.7, 1]},
...                     train_control=dict(fold_num=5,
...                                        resampling_method='cv',
...                                        random_state=1,
...                                        ref_metric=['auc']),
...                     scoring='error_rate')
>>> gscv.fit(data=df_train, key='ID', label='CLASS',
...          partition_method='stratified',
...          partition_random_state=1,
...          stratified_column='CLASS',
...          build_report=True)

To look at the dataset report:

>>> UnifiedReport(df_train).build().display()

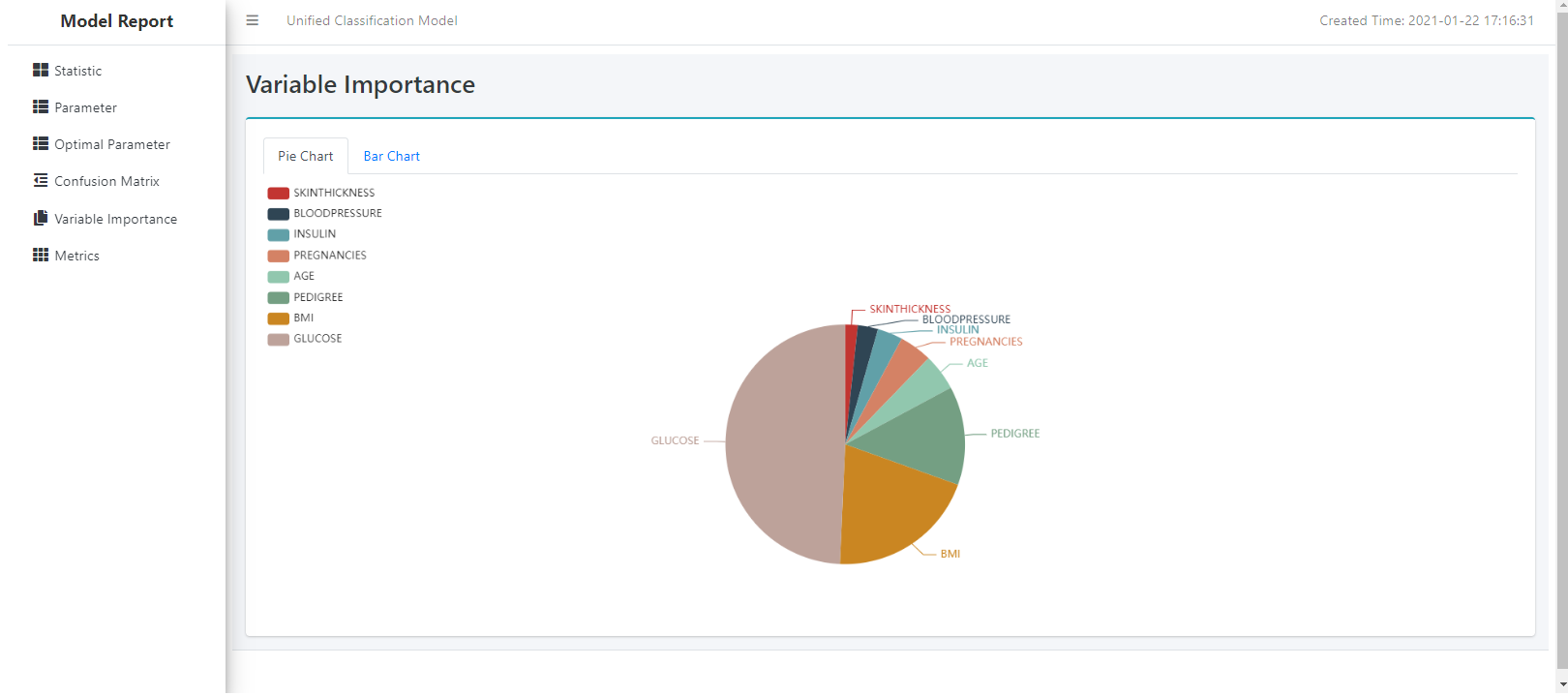

To see the model report:

>>> UnifiedReport(gscv.estimator).display()

The Optimal Parameter page of the same model report shows the parameter values selected by the grid search.

Case 3: Local interpretability of models - tree SHAP

>>> uhgc = UnifiedClassification(func='HybridGradientBoostingTree')  # HGBT model
>>> uhgc.fit(data=df_train)  # no background data is needed for tree models
>>> res = uhgc.predict(data=df_predict,
...                    ...,
...                    attribution_method='tree-shap',  # specify the attribution method to activate local interpretability
...                    top_k_attributions=5)

Case 4: Local interpretability of models - kernel SHAP

>>> unb = UnifiedClassification(func='NaiveBayes')  # Naive Bayes model
>>> unb.fit(data=df_train,
...         background_size=10,  # specify a non-zero background data size to activate local interpretability
...         background_random_state=2022)
>>> res = unb.predict(data=df_predict,
...                   ...,
...                   top_k_attributions=4,
...                   sample_size=0,
...                   random_state=2022)

- Attributes:

- model_ : list of DataFrames

Model content.

- importance_ : DataFrame

The feature importance (the higher, the more important the feature).

- statistics_ : DataFrame

Names and values of statistics.

- optimal_param_ : DataFrame

Provides the optimal parameters selected.

Available only when parameter selection is triggered.

- confusion_matrix_ : DataFrame

Confusion matrix used to evaluate the performance of classification algorithms.

- metrics_ : DataFrame

Values of metrics.

- partition_ : DataFrame

Type of partition.

- error_msg_ : DataFrame

Error message. Only valid if massive is True when initializing an 'UnifiedClassification' instance.

Methods

abap_class_mapping(value): Map the ABAP class.

add_amdp_item(template_key, value): Add an item.

add_amdp_name(amdp_name): Add the AMDP name.

add_amdp_template(template_name): Add an AMDP template.

After add_item, generate the AMDP file from the template.

build_report(): Build the model report.

create_amdp_class(amdp_name[, ...]): Create an AMDP class file.

create_model_state([model, function, ...]): Create a PAL model state.

delete_model_state([state]): Delete a PAL model state.

disable_mlflow_autologging(): Disable mlflow autologging.

enable_mlflow_autologging([schema, meta, ...]): Enable mlflow autologging.

fit(data[, key, features, label, group_key, ...]): Fit function for unified classification.

generate_html_report(filename): Save the model report as an HTML file.

Render the model report as a notebook iframe.

Get the AMDP keys that are not filled in.

get_confusion_matrix(): Return the confusion matrix.

get_feature_importances(): Return the feature importance.

get_optimal_parameters(): Return the optimal parameters.

get_performance_metrics(): Return the performance metrics.

Load the ABAP class mapping.

load_amdp_template(template_name): Load an AMDP template.

predict(data[, key, features, group_key, ...]): Predict with a classification model.

score(data[, key, features, label, ...]): Evaluate the model quality.

set_framework_version(framework_version): Switch between the v1 and v2 versions of the report.

set_metric_samplings([roc_sampling, ...]): Set metric samplings for the report builder.

set_model_state(state): Set the model state from state information.

Use the reason code generated during the prediction phase to build a ShapleyExplainer instance.

set_shapley_explainer_of_score_phase(...[, ...]): Use the reason code generated during the scoring phase to build a ShapleyExplainer instance.

update_cv_params(name, value, typ): Update parameters for model evaluation/parameter selection.

write_amdp_file([filepath, version, outdir]): Write the template to a file.

- disable_mlflow_autologging()

It will disable mlflow autologging.

- enable_mlflow_autologging(schema=None, meta=None, is_exported=False)

It will enable mlflow autologging.

- Parameters:

- schema : str, optional

Defines the model storage schema for mlflow autologging.

Defaults to the current schema.

- meta : str, optional

Defines the model storage meta table for mlflow autologging.

Defaults to 'HANAML_MLFLOW_MODEL_STORAGE'.

- is_exported : bool, optional

Determines whether to export the HANA model to mlflow.

Defaults to False.

- update_cv_params(name, value, typ)

Update parameters for model-evaluation/parameter-selection.

- fit(data, key=None, features=None, label=None, group_key=None, group_params=None, purpose=None, partition_method=None, stratified_column=None, partition_random_state=None, training_percent=None, training_size=None, ntiles=None, categorical_variable=None, output_partition_result=None, background_size=None, background_random_state=None, build_report=False, impute=False, strategy=None, strategy_by_col=None, als_factors=None, als_lambda=None, als_maxit=None, als_randomstate=None, als_exit_threshold=None, als_exit_interval=None, als_linsolver=None, als_cg_maxit=None, als_centering=None, als_scaling=None, meta_data=None, permutation_importance=None, permutation_evaluation_metric=None, permutation_n_repeats=None, permutation_seed=None, permutation_n_samples=None, **kwargs)

Fit function for unified classification.

- Parameters:

- data : DataFrame

DataFrame that contains the training data.

If the corresponding UnifiedClassification instance is for pivoted input data (i.e. pivoted=True is set in initialization), then data must be pivoted as follows.

In massive mode, data must be structured as follows:

1st column: Group ID, type INTEGER, VARCHAR or NVARCHAR

2nd column: Record ID, type INTEGER, VARCHAR or NVARCHAR

3rd column: Variable Name, type VARCHAR or NVARCHAR

4th column: Variable Value, type VARCHAR or NVARCHAR

5th column: Self-defined Data Partition, type INTEGER, 1 for training and 2 for validation.

In non-massive mode, data must be structured as follows:

1st column: Record ID, type INTEGER, VARCHAR or NVARCHAR

2nd column: Variable Name, type VARCHAR or NVARCHAR

3rd column: Variable Value, type VARCHAR or NVARCHAR

4th column: Self-defined Data Partition, type INTEGER, 1 for training and 2 for validation.
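The non-massive layout above is a long (entity-attribute-value) format. The following sketch uses a hypothetical helper, to_pivoted_rows, which is not part of hana_ml, to show how wide records map onto it in plain Python. The column names and values are toy data, and the label column (here CLASS) simply becomes another variable, which the meta_data table passed to fit() would mark as TARGET.

```python
from typing import Any, Dict, List, Sequence, Tuple


def to_pivoted_rows(
    records: Sequence[Dict[str, Any]],
    id_col: str,
    partition: Sequence[int],
) -> List[Tuple[Any, str, str, int]]:
    """Convert wide records to (record ID, variable name, variable value, partition) rows.

    partition[i] is 1 (training) or 2 (validation) for records[i]. Values are
    converted to str because the layout stores them as VARCHAR/NVARCHAR.
    """
    if len(records) != len(partition):
        raise ValueError("need one partition flag per record")
    rows = []
    for record, part in zip(records, partition):
        for name, value in record.items():
            if name != id_col:
                rows.append((record[id_col], name, str(value), part))
    return rows


wide = [
    {"ID": 0, "OUTLOOK": "Sunny", "TEMP": 75, "CLASS": "Play"},
    {"ID": 1, "OUTLOOK": "Rain", "TEMP": 70, "CLASS": "Do not Play"},
]
for row in to_pivoted_rows(wide, id_col="ID", partition=[1, 2]):
    print(row)  # first row: (0, 'OUTLOOK', 'Sunny', 1)
```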

Note

If data is pivoted, then the following parameters become ineffective: key, features, label, group_key and purpose.

- key : str, optional

Name of the ID column.

If key is not provided, then:

if data is indexed by a single column, key defaults to that index column;

otherwise, it is assumed that data contains no ID column.

- features : list of str, optional

Names of the feature columns.

If features is not provided, it defaults to all non-ID, non-label columns.

- label : str, optional

Name of the dependent variable. If label is not provided, it defaults to the last non-ID column.

- group_key : str, optional

Name of the group key column. Data type can be INT or NVARCHAR/VARCHAR. If the data type is INT, only parameters set in group_params are valid.

This parameter is only valid when massive is True in class instance initialization. Defaults to the first column of data if the index columns of data are not provided; otherwise, defaults to the first index column.

- group_params : dict, optional

If massive mode is activated (massive is set to True in class instance initialization), the input data for classification is divided into groups, and different classification parameters can be applied to each group. This parameter specifies the parameter values of the chosen classification algorithm func in fit() for different groups in dict format: keys correspond to group_key values, and each value is a dict of parameter assignments for that group.

Valid only when massive is set to True in class instance initialization. Defaults to None.

- purpose : str, optional

Indicates the name of the purpose column, which is used for user-defined data partition.

The meaning of the value in the column for each data instance is shown below:

1 : training

2 : validation

Valid and mandatory only when partition_method is 'predefined' (or equivalently, 'user_defined'). No default value.

- partition_method : {'no', 'predefined', 'stratified'}, optional

Defines the way to divide the dataset.

'no' : no partition.

'predefined'/'user_defined' : predefined partition.

'stratified' : stratified partition.

Defaults to 'no'.
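A stratified partition splits each class separately, so the training and validation sets keep the class proportions of the full data. The sketch below is a plain-Python illustration of that idea, not PAL's implementation: rounding each class's training count to the nearest integer is an assumption, and seed=0 falls back to system randomness to mirror partition_random_state=0.

```python
import random
from collections import defaultdict
from typing import Any, Dict, List, Sequence, Tuple


def stratified_split(
    labels: Sequence[Any],
    training_percent: float = 0.8,
    seed: int = 0,
) -> Tuple[List[int], List[int]]:
    """Return (training indices, validation indices), split within each class."""
    if not 0.0 <= training_percent <= 1.0:
        raise ValueError("training_percent must lie in [0, 1]")
    # seed 0 -> system randomness, like partition_random_state=0
    rng = random.Random(None if seed == 0 else seed)
    by_class: Dict[Any, List[int]] = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    train: List[int] = []
    valid: List[int] = []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = round(len(indices) * training_percent)  # assumed rounding rule
        train.extend(indices[:n_train])
        valid.extend(indices[n_train:])
    return sorted(train), sorted(valid)


labels = ["Play"] * 10 + ["Do not Play"] * 5
train_idx, valid_idx = stratified_split(labels, training_percent=0.8, seed=2)
print(len(train_idx), len(valid_idx))  # 12 3
```

With 10 'Play' and 5 'Do not Play' rows, each class contributes 80% of its rows to training, so the validation set holds 2 and 1 rows of the two classes respectively.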

- stratified_column : str, optional

Indicates which column is used for stratification.

Valid only when partition_method is set to 'stratified'. No default value.

- partition_random_state : int, optional

Indicates the seed used to initialize the random number generator.

Valid only when partition_method is set to 'stratified'.

0 : Uses the system time.

Not 0 : Uses the specified seed.

Defaults to 0.

- training_percent : float, optional

The percentage of data used for training. Value range: 0 <= value <= 1.

Defaults to 0.8.

- training_size : int, optional

Row size of data used for training. Value range >= 0.

If both training_percent and training_size are specified, training_percent takes precedence. No default value.
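The precedence between training_percent and training_size can be sketched as a small function. This is an illustration under stated assumptions, not hana_ml or PAL code: the fractional row count is truncated, training_size is assumed to be used when training_percent is not given, and a training_size larger than the data is assumed to be capped at the row count.

```python
from typing import Optional


def n_training_rows(n_rows: int,
                    training_percent: Optional[float] = None,
                    training_size: Optional[int] = None) -> int:
    """Training row count under the precedence rule (truncation is assumed)."""
    if training_percent is not None:
        if not 0.0 <= training_percent <= 1.0:
            raise ValueError("training_percent must lie in [0, 1]")
        return int(n_rows * training_percent)  # takes precedence over training_size
    if training_size is not None:
        if training_size < 0:
            raise ValueError("training_size must be >= 0")
        return min(training_size, n_rows)  # assumed cap at the data size
    return int(n_rows * 0.8)  # documented default training_percent


print(n_training_rows(100, training_percent=0.75, training_size=30))  # 75
print(n_training_rows(100, training_size=30))                         # 30
```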

- ntiles : int, optional

Used to control the population tiles in metrics output. The value should be at least 1 and no larger than the row size of the validation data. For AUC, this parameter means the maximum number of tiles.

If the row size of data for metrics evaluation is less than 20, the default value is 1; otherwise it is 20.

- categorical_variable : str or list of str, optional

Specifies INTEGER column(s) that should be treated as categorical.

Other INTEGER columns will be treated as continuous.

No default value.

- output_partition_result : bool, optional

Specifies whether or not to output the partition result.

Valid only when partition_method is not 'no' and key is not None. Defaults to False.

- background_size : int, optional

Specifies the size of background data used for SHapley Additive exPlanations (SHAP) values calculation.

Should not be larger than the size of the training data.

Valid only for Naive Bayes, Support Vector Machine, Multilayer Perceptron and multi-class logistic regression models. For such models, users should specify a non-zero value to activate SHAP explanations in the model scoring phase (i.e. predict() or score()).

Defaults to 0 (no background data, in which case the calculation of SHAP values is disabled).

Note

SHAP is a method for local interpretability of models, please see Local Interpretability of Models for more details.
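The role of background data can be illustrated with an exact Shapley computation: a feature that is absent from a coalition takes its value from each background row, and the model output is averaged over those rows. Kernel SHAP approximates these values by sampling feature combinations instead of enumerating them, so the brute-force enumeration below (exponential in the number of features) is only a sketch of the definition, not PAL's algorithm; the linear model and data are toy assumptions.

```python
from itertools import combinations
from math import factorial
from typing import Callable, FrozenSet, List, Sequence


def shapley_values(
    model: Callable[[Sequence[float]], float],
    x: Sequence[float],
    background: Sequence[Sequence[float]],
) -> List[float]:
    """Exact Shapley values of model at x; absent features come from background rows."""
    m = len(x)

    def value(subset: FrozenSet[int]) -> float:
        # v(S): mean model output with x's values on S and background values elsewhere
        total = 0.0
        for b in background:
            z = [x[j] if j in subset else b[j] for j in range(m)]
            total += model(z)
        return total / len(background)

    phi = []
    for i in range(m):
        others = [j for j in range(m) if j != i]
        contrib = 0.0
        for size in range(len(others) + 1):
            weight = factorial(size) * factorial(m - size - 1) / factorial(m)
            for subset in combinations(others, size):
                s = frozenset(subset)
                contrib += weight * (value(s | {i}) - value(s))
        phi.append(contrib)
    return phi


def linear_model(z: Sequence[float]) -> float:
    return 2.0 * z[0] - 1.0 * z[1] + 0.5 * z[2]


background = [[0.0, 0.0, 0.0], [2.0, 4.0, 2.0]]
x = [1.0, 1.0, 4.0]
phi = shapley_values(linear_model, x, background)
print(phi)  # approximately [0.0, 1.0, 1.5]
```

For a linear model each value reduces to weight times (feature value minus background mean), and the values always sum to the model output at x minus the mean output over the background rows.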

- background_random_state : int, optional

Specifies the seed for the random number generator used in background data sampling.

0 : Uses the current time as seed

Others : The specified seed value

Valid only for Naive Bayes, Support Vector Machine, Multilayer Perceptron and multi-class logistic regression models.

Defaults to 0.

- build_report : bool, optional

Whether to build a model report or not.

Example:

>>> from hana_ml.visualizers.unified_report import UnifiedReport
>>> hgc = UnifiedClassification('HybridGradientBoostingTree')
>>> hgc.fit(data=diabetes_train, key='ID', label='CLASS',
...         partition_method='stratified', partition_random_state=1,
...         stratified_column='CLASS', build_report=True)
>>> UnifiedReport(hgc).display()

Defaults to False.

- impute : bool, optional

Specifies whether or not to handle missing values in the data for training.

Defaults to False.

- strategy, strategy_by_col, als_* : parameters for missing value handling, optional

These parameters control how missing values in the data are handled; please see Parameters for Missing Value Handling in HANA DataFrame for more details.

All of them are valid only when impute is True.

- meta_data : DataFrame, optional

Specifies the meta data for pivoted input data. Mandatory if pivoted is set to True when initializing the class instance.

If provided, meta_data should be structured as follows:

1st column: NAME, type VARCHAR or NVARCHAR. The name of the variable.

2nd column: TYPE, type VARCHAR or NVARCHAR. The type of the variable, which can be CONTINUOUS, CATEGORICAL or TARGET.

- permutation_* : parameters for permutation feature importance, optional

All parameters with the prefix 'permutation_' are for the calculation of permutation feature importance.

They are valid only when partition_method is 'predefined' or 'stratified', since permutation feature importance is calculated on the validation set. Please see Permutation Feature Importance for more details.
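Permutation feature importance measures how much a validation metric degrades when the values of one feature are shuffled. The sketch below uses accuracy as the metric and toy data; both are assumptions, n_repeats and seed only loosely correspond to permutation_n_repeats and permutation_seed, and the code is an illustration of the technique, not PAL's implementation.

```python
import random
from statistics import mean
from typing import Callable, List, Sequence


def permutation_importance(
    predict: Callable[[Sequence[float]], object],
    rows: Sequence[Sequence[float]],
    labels: Sequence[object],
    n_repeats: int = 5,
    seed: int = 1,
) -> List[float]:
    """Mean drop in validation accuracy when each feature column is shuffled."""
    rng = random.Random(seed)

    def accuracy(data: Sequence[Sequence[float]]) -> float:
        return mean(1.0 if predict(r) == y else 0.0 for r, y in zip(data, labels))

    baseline = accuracy(rows)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)  # break the link between feature j and the label
            permuted = [list(r[:j]) + [v] + list(r[j + 1:]) for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(permuted))
        importances.append(mean(drops))
    return importances


def toy_model(r: Sequence[float]) -> str:
    # Uses feature 0 only, so feature 1 should get zero importance.
    return "Yes" if r[0] > 0.5 else "No"


rows = [[0.0, 5.0], [1.0, 3.0], [0.0, 1.0], [1.0, 7.0], [0.0, 2.0], [1.0, 4.0]]
labels = ["No", "Yes", "No", "Yes", "No", "Yes"]
print(permutation_importance(toy_model, rows, labels))
```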

- **kwargs : keyword arguments

Additional keyword arguments of model fitting for different classification algorithms.

Please refer to the fit function of each algorithm as follows:

'DecisionTree' : DecisionTreeClassifier

'HybridGradientBoostingTree' : HybridGradientBoostingClassifier

'LogisticRegression' : LogisticRegression

'MLP' : MLPClassifier

'NaiveBayes' : NaiveBayes

'RandomDecisionTree' : RDTClassifier

'SVM' : SVC

- Returns:

- A fitted object of 'UnifiedClassification'.

- get_optimal_parameters()

Return the optimal parameters.

- get_confusion_matrix()

Return the confusion matrix.

- get_feature_importances()

Return the feature importance.

- get_performance_metrics()

Return the performance metrics.

- predict(data, key=None, features=None, group_key=None, group_params=None, model=None, thread_ratio=None, verbose=None, class_map1=None, class_map0=None, alpha=None, block_size=None, missing_replacement=None, categorical_variable=None, top_k_attributions=None, attribution_method=None, sample_size=None, random_state=None, impute=False, strategy=None, strategy_by_col=None, als_factors=None, als_lambda=None, als_maxit=None, als_randomstate=None, als_exit_threshold=None, als_exit_interval=None, als_linsolver=None, als_cg_maxit=None, als_centering=None, als_scaling=None, ignore_unknown_category=None, **kwargs)

Predict with a classification model.

- Parameters:

- data : DataFrame

Data to be predicted.

If self.pivoted is True, then data must be pivoted, i.e. structured the same as the pivoted data used for training (excluding the last data partition column) and containing no target values. In this case, the following parameters become ineffective: key, features, group_key.

- key : str, optional

Name of the ID column.

In single mode, mandatory if data is not indexed, or the index of data contains multiple columns. Defaults to the single index column of data if not provided.

In massive mode, defaults to the first non-group-key column of data if the index columns of data are not provided. Otherwise, defaults to the second index column of data, with the first index column used as group_key.

- features : a list of str, optional

Names of feature columns in data for prediction.

Defaults to all non-key columns in data if not provided.

- group_key : str, optional

Name of the group key column. Data type can be INT or NVARCHAR/VARCHAR. If the data type is INT, only parameters set in group_params are valid.

This parameter is only valid when massive is set to True in class instance initialization. Defaults to the first column of data if the index columns of data are not provided; otherwise, defaults to the first index column.

- group_params : dict, optional

If massive mode is activated (massive is set to True in class instance initialization), the input data for classification is divided into groups, and different classification parameters can be applied to each group. This parameter specifies the parameter values of the chosen classification algorithm func in predict() for different groups in dict format: keys correspond to group_key values, and each value is a dict of parameter assignments for that group.

Valid only when massive is set to True in class instance initialization. Defaults to None.

- model : DataFrame, optional

Fitted classification model.

Defaults to self.model_.

- thread_ratio : float, optional

Controls the proportion of available threads to use for prediction.

The value range is from 0 to 1, where 0 indicates a single thread, and 1 indicates up to all available threads.

Values between 0 and 1 will use that percentage of available threads.

Values outside this range tell PAL to heuristically determine the number of threads to use.

Defaults to the PAL's default value.
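The thread_ratio semantics above can be summarized as a mapping from the ratio to a thread count. This is a hypothetical sketch, not PAL's code: rounding the fraction down (with a minimum of one thread) is an assumption, and None stands for the case where PAL chooses the count heuristically.

```python
import math
from typing import Optional


def threads_for_ratio(thread_ratio: float, available: int) -> Optional[int]:
    """Thread count for a thread_ratio; None means PAL decides heuristically."""
    if not 0.0 <= thread_ratio <= 1.0:
        return None  # outside [0, 1]: heuristic choice by PAL
    if thread_ratio == 0.0:
        return 1  # 0 means a single thread
    # Assumption: the share of available threads is rounded down, minimum one.
    return max(1, math.floor(thread_ratio * available))


print(threads_for_ratio(0.5, 8))  # 4
```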

- verbose : bool, optional

Specifies whether to output all classes and the corresponding confidences for each data record.

Defaults to False.

- class_map0 : str, optional

Specifies the label value which will be mapped to 0 in logistic regression.

Valid only for logistic regression models when label variable is of VARCHAR or NVARCHAR type.

No default value.

- class_map1 : str, optional

Specifies the label value which will be mapped to 1 in logistic regression.

Valid only for logistic regression models when label variable is of VARCHAR or NVARCHAR type.

No default value.

- alpha : float, optional

Specifies the value for Laplace smoothing.

0: Disables Laplace smoothing.

Other positive values: Enables Laplace smoothing for discrete values.

Valid only for Naive Bayes models.

Defaults to 0.
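With alpha > 0, a category that never occurred together with a class in training no longer receives zero probability for that class. The sketch shows the standard additive (Laplace) smoothing estimate for one categorical feature; the exact formula PAL uses is not stated here, so treat this as an illustration with toy data.

```python
from collections import Counter
from typing import Hashable, Sequence


def smoothed_likelihood(class_values: Sequence[Hashable], value: Hashable,
                        n_categories: int, alpha: float = 0.0) -> float:
    """Additive (Laplace) estimate of P(feature = value | class).

    class_values: the feature's values in the training rows of one class.
    n_categories: number of distinct categories the feature can take.
    """
    counts = Counter(class_values)
    return (counts[value] + alpha) / (len(class_values) + alpha * n_categories)


outlook_given_play = ["Sunny", "Overcast", "Overcast", "Rain"]
print(smoothed_likelihood(outlook_given_play, "Snow", n_categories=4, alpha=0))  # 0.0
print(smoothed_likelihood(outlook_given_play, "Snow", n_categories=4, alpha=1))  # 0.125
```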

- block_size : int, optional

Specifies the number of rows loaded at a time during scoring.

0: load all data at once.

Other positive values: the specified number.

Valid only for RandomDecisionTree and HybridGradientBoostingTree models.

Defaults to 0.

- missing_replacement : str, optional

Specifies the strategy for replacement of missing values in prediction data.

'feature_marginalized': marginalises each missing feature out independently.

'instance_marginalized': marginalises all missing features in an instance as a whole corresponding to each category.

Valid only when impute is False, and only for RandomDecisionTree and HybridGradientBoostingTree models. Defaults to 'feature_marginalized'.

- categorical_variable : str or list of str, optional

Specifies INTEGER column(s) that should be treated as categorical.

Other INTEGER columns will be treated as continuous.

No default value.

- top_k_attributions : int, optional

Specifies the number of features with highest attributions to output.

Defaults to 10.

- attribution_method : {'no', 'saabas', 'tree-shap'}, optional

Specifies which method to use for tree-based model reasoning.

'no' : No reasoning.

'saabas' : Saabas method.

'tree-shap' : Tree SHAP method.

Valid only for tree-based models, i.e. DecisionTree, RandomDecisionTree and HybridGradientBoostingTree models. For such models, users should explicitly specify either 'saabas' or 'tree-shap' as the attribution method in order to activate SHAP explanations in the prediction result.

Defaults to 'tree-shap'.
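The Saabas method attributes a tree prediction by walking the decision path and crediting each split feature with the change in the node's expected output. The sketch below applies that idea to a hypothetical dict-based toy tree (not PAL's model format). Unlike Tree SHAP, which averages over all feature orderings, Saabas only follows the single path taken by the instance; in both cases the root value plus the contributions equals the prediction.

```python
from typing import Any, Dict, List, Sequence, Tuple

Node = Dict[str, Any]


def saabas_attributions(tree: Node, x: Sequence[float],
                        n_features: int) -> Tuple[float, List[float]]:
    """Return (root value, per-feature contributions) along x's decision path.

    Each internal node holds "feature", "threshold", "left" and "right"; every
    node holds "value", the expected model output at that node.
    """
    contributions = [0.0] * n_features
    node = tree
    while "feature" in node:
        j = node["feature"]
        child = node["left"] if x[j] <= node["threshold"] else node["right"]
        # credit the split feature with the change in expected output
        contributions[j] += child["value"] - node["value"]
        node = child
    return tree["value"], contributions


# Toy tree; "value" is the share of class 'Play' among training rows in the node.
tree = {
    "value": 0.6, "feature": 1, "threshold": 80.0,
    "left": {
        "value": 0.8, "feature": 0, "threshold": 0.5,
        "left": {"value": 0.9},
        "right": {"value": 0.5},
    },
    "right": {"value": 0.2},
}
bias, contributions = saabas_attributions(tree, x=[1.0, 70.0], n_features=2)
print(bias, contributions)  # root value, then roughly [-0.3, 0.2]
```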

- sample_size : int, optional

Specifies the number of sampled combinations of features.

0 : Heuristically determined by algorithm.

Others : The specified sample size.

Valid only for Naive Bayes, Support Vector Machine, Multilayer Perceptron and Multi-class Logistic Regression models.

Defaults to 0.

Note

top_k_attributions, attribution_method and sample_size are core parameters for local interpretability of models in the hana_ml.algorithms.pal package; please see Local Interpretability of Models for more details.

- random_state : int, optional

Specifies the seed for the random number generator when sampling combinations of features.

0 : Uses the current time as seed.

Others : The actual seed.

Valid only for Naive Bayes, Support Vector Machine, Multilayer Perceptron and Multi-class Logistic Regression models.

Defaults to 0.

- impute : bool, optional

Specifies whether or not to handle missing values in the data for prediction.

Defaults to False.

- strategy, strategy_by_col, als_* : parameters for missing value handling, optional

These parameters control how missing values in the data are handled; please see Parameters for Missing Value Handling in HANA DataFrame for more details.

All of them are valid only when impute is True.

- ignore_unknown_category : bool, optional

Specifies whether or not to ignore unknown category value.

False : Report error if unknown category value is found.

True : Ignore unknown category value if there is any.

Valid only when the model for prediction is multi-class logistic regression.

Defaults to True.

- **kwargs : keyword arguments

Additional keyword arguments w.r.t. different classification algorithms within UnifiedClassification.

- Returns:

- DataFrame 1

Prediction result.

- DataFrame 2 (optional)

Error message. Only valid if massive is True when initializing an 'UnifiedClassification' instance.

- score(data, key=None, features=None, label=None, group_key=None, group_params=None, model=None, thread_ratio=None, max_result_num=None, ntiles=None, class_map1=None, class_map0=None, alpha=None, block_size=None, missing_replacement=None, categorical_variable=None, top_k_attributions=None, attribution_method=None, sample_size=None, random_state=None, impute=False, strategy=None, strategy_by_col=None, als_factors=None, als_lambda=None, als_maxit=None, als_randomstate=None, als_exit_threshold=None, als_exit_interval=None, als_linsolver=None, als_cg_maxit=None, als_centering=None, als_scaling=None, ignore_unknown_category=None)

Use the score function to evaluate model quality. Unified classification provides statistics and metrics that describe model quality; currently the following metrics are supported:

AUC and ROC

RECALL, PRECISION, F1-SCORE, SUPPORT

ACCURACY

KAPPA

MCC

CUMULATIVE GAINS

CUMULATIVE LIFT

LIFT
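For reference, the textbook definitions of several of these metrics for one positive class can be computed from its 2x2 confusion matrix as below. This is a plain-Python illustration with made-up counts; PAL reports per-class values (see the CLASS_NAME column in the statistics output) and its handling of edge cases such as empty classes may differ from the zero fallbacks chosen here.

```python
import math
from typing import Dict


def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Textbook metrics for one positive class from its 2x2 confusion matrix."""
    n = tp + fp + fn + tn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / n
    # Cohen's kappa: observed agreement corrected for chance agreement
    p_chance = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / (n * n)
    kappa = (accuracy - p_chance) / (1 - p_chance) if p_chance != 1 else 0.0
    # Matthews correlation coefficient
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {
        "PRECISION": precision, "RECALL": recall, "F1_SCORE": f1,
        "SUPPORT": float(tp + fn), "ACCURACY": accuracy,
        "KAPPA": kappa, "MCC": mcc,
    }


print(binary_metrics(tp=40, fp=10, fn=5, tn=45))
```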

- Parameters:

- data : DataFrame

Data for scoring.

If self.pivoted is True, then data must be pivoted, i.e. structured the same as the pivoted data used for training (excluding the last data partition column). In this case, the following parameters become ineffective: key, features, group_key.

- key : str, optional

Name of the ID column.

Mandatory if data is not indexed, or the index of data contains multiple columns. Defaults to the single index column of data if not provided.

- features : ListOfString or str, optional

Names of feature columns.

Defaults to all non-ID, non-label columns if not provided.

- label : str, optional

Name of the label column.

Defaults to the last non-ID column if not provided.

- group_key : str, optional

Name of the group key column. Data type can be INT or NVARCHAR/VARCHAR. If the data type is INT, only parameters set in group_params are valid.

This parameter is only valid when massive is set to True in class instance initialization. Defaults to the first column of data if the index columns of data are not provided; otherwise, defaults to the first index column.

- group_params : dict, optional

If massive mode is activated (massive is True in class instance initialization), the input data for classification is divided into groups, and different classification parameters can be applied to each group. This parameter specifies the parameter values of the chosen classification algorithm func in score() for different groups in dict format: keys correspond to group_key values, and each value is a dict of parameter assignments for that group.

Valid only when massive is set to True in class instance initialization. Defaults to None.

- model : DataFrame, optional

Fitted classification model.

Defaults to self.model_.

- thread_ratio : float, optional

Controls the proportion of available threads to use for scoring.

The value range is from 0 to 1, where 0 indicates a single thread, and 1 indicates up to all available threads.

Values between 0 and 1 will use that percentage of available threads.

Values outside this range tell PAL to heuristically determine the number of threads to use.

Defaults to the PAL's default value.

- max_result_num : int, optional

Specifies the output number of prediction results.

- label : str, optional

Must be the same as the label used in training.

- ntiles : int, optional

Used to control the population tiles in metrics output.

The value should be at least 1 and no larger than the row size of the input data.
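Cumulative gains and lift are evaluated over the population tiles that ntiles controls. The sketch sorts rows by predicted confidence for one class, cuts the population into ntiles roughly equal tiles, and reports for each cumulative cut the share of positives captured (gain) and that share divided by the population share (lift). It is a plain-Python illustration with toy data; the rounding of tile boundaries is an assumption, and PAL's tile boundaries and tie handling may differ.

```python
from typing import List, Sequence, Tuple


def gains_and_lift(scores: Sequence[float], is_positive: Sequence[bool],
                   ntiles: int) -> List[Tuple[float, float, float]]:
    """(population share, cumulative gain, cumulative lift) at the end of each tile."""
    n = len(scores)
    if not 1 <= ntiles <= n:
        raise ValueError("ntiles must be between 1 and the number of rows")
    total_pos = sum(is_positive)
    if total_pos == 0:
        raise ValueError("need at least one positive row")
    order = sorted(range(n), key=lambda i: scores[i], reverse=True)
    result = []
    for t in range(1, ntiles + 1):
        cutoff = round(n * t / ntiles)  # rows in the first t tiles (assumed rounding)
        captured = sum(is_positive[i] for i in order[:cutoff])
        population = cutoff / n
        gain = captured / total_pos
        result.append((population, gain, gain / population))
    return result


scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.05]
positive = [True, True, False, True, False, False, True, False, False, False]
print(gains_and_lift(scores, positive, ntiles=2))  # [(0.5, 0.75, 1.5), (1.0, 1.0, 1.0)]
```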

- class_map0 : str, optional

Specifies the label value which will be mapped to 0 in logistic regression.

Valid only for logistic regression models when label variable is of VARCHAR or NVARCHAR type.

No default value.

- class_map1 : str, optional

Specifies the label value which will be mapped to 1 in logistic regression.

Valid only for logistic regression models when label variable is of VARCHAR or NVARCHAR type.

No default value.

- alpha : float, optional

Specifies the value for Laplace smoothing.

0: Disables Laplace smoothing.

Other positive values: Enables Laplace smoothing for discrete values.

Valid only for Naive Bayes models.

Defaults to 0.

- block_size : int, optional

Specifies the number of rows loaded at a time during scoring.

0: load all data at once.

Other positive values: the specified number.

Valid only for RandomDecisionTree and HybridGradientBoostingTree models.

Defaults to 0.

- missing_replacement : str, optional

Specifies the strategy for replacement of missing values in prediction data.

'feature_marginalized': marginalises each missing feature out independently.

'instance_marginalized': marginalises all missing features in an instance as a whole corresponding to each category.

Valid only when impute is False, and only for RandomDecisionTree and HybridGradientBoostingTree models. Defaults to 'feature_marginalized'.

- categorical_variable : str or list of str, optional

Specifies INTEGER column(s) that should be treated as categorical.

Other INTEGER columns will be treated as continuous.

Valid only for logistic regression models.

No default value.

- top_k_attributions : int, optional

Specifies the number of features with highest attributions to output.

Defaults to 10.

- attribution_method : {'no', 'saabas', 'tree-shap'}, optional

Specifies which method to use for tree-based model reasoning.

'no' : No reasoning.

'saabas' : Saabas method.

'tree-shap' : Tree SHAP method.

Valid only for tree-based models, i.e. DecisionTree, RandomDecisionTree and HybridGradientBoostingTree models. For such models, users should explicitly specify either 'saabas' or 'tree-shap' as the attribution method in order to activate SHAP explanations in the scoring result.

Defaults to 'tree-shap'.

- sample_size : int, optional

Specifies the number of sampled combinations of features.

0 : Heuristically determined by algorithm.

Others : The specified sample size.

Valid only for Naive Bayes, Support Vector Machine, Multilayer Perceptron and Multi-class Logistic Regression models.

Defaults to 0.

Note

top_k_attributions, attribution_method and sample_size are core parameters for local interpretability of models in the hana_ml.algorithms.pal package; please see Local Interpretability of Models for more details.

- random_state : int, optional

Specifies the seed for random number generator when sampling the combination of features.

0 : Uses the current time as seed.

Others : The actual seed.

Valid only for Naive Bayes, Support Vector Machine, Multilayer Perceptron and Multi-class Logistic Regression models.

Defaults to 0.
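One common way to estimate Shapley values from a limited number of sampled feature combinations is permutation sampling, sketched below to show how sample_size and random_state interact. This is an assumed technique, not necessarily what PAL does; in particular the fallback of 10 samples per feature when sample_size is 0 is a hypothetical heuristic, and sampled_shapley is a hypothetical name.

```python
import random
import time
from typing import Callable, Dict, List


def sampled_shapley(f: Callable[[Dict[str, float]], float],
                    x: Dict[str, float],
                    baseline: Dict[str, float],
                    sample_size: int = 0,
                    random_state: int = 0) -> Dict[str, float]:
    """Monte Carlo (permutation-sampling) estimate of Shapley values of f at x."""
    features: List[str] = list(x)
    # Hypothetical heuristic used when sample_size is 0.
    n_samples = sample_size if sample_size > 0 else 10 * len(features)
    # random_state = 0 seeds from the clock, otherwise the given seed is used.
    seed = random_state if random_state != 0 else time.time_ns()
    rng = random.Random(seed)
    phi = {name: 0.0 for name in features}
    for _ in range(n_samples):
        order = features[:]
        rng.shuffle(order)
        z = dict(baseline)          # start from the reference point
        prev = f(z)
        for name in order:          # switch features to their x values one at a time
            z[name] = x[name]
            cur = f(z)
            phi[name] += cur - prev
            prev = cur
    return {name: total / n_samples for name, total in phi.items()}


f = lambda z: z["a"] * z["b"]
print(sampled_shapley(f, {"a": 1.0, "b": 2.0}, {"a": 0.0, "b": 0.0},
                      sample_size=50, random_state=7))
```

A fixed non-zero random_state makes repeated explanations reproducible, while larger sample sizes reduce the variance of the estimate.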

- impute : bool, optional

Specifies whether or not to handle missing values in the data for scoring.

Defaults to False.

- strategy, strategy_by_col, als_* : parameters for missing value handling, optional

These parameters are for handling missing values in the data; please see Parameters for Missing Value Handling in HANA DataFrame for more details.

All of these parameters are valid only when impute is set to True.

- ignore_unknown_category : bool, optional

Specifies whether or not to ignore unknown category values.

False : Reports an error if an unknown category value is found.

True : Ignores unknown category values, if any.

Valid only when the model for scoring is multi-class logistic regression.

Defaults to True.
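The two settings of ignore_unknown_category can be illustrated with a one-hot encoder that meets a value not seen during training. Representing an ignored value as an all-zero indicator vector is an assumption of this sketch, not documented PAL behavior; one_hot is a hypothetical helper.

```python
from typing import List, Sequence


def one_hot(value: str, known: Sequence[str],
            ignore_unknown_category: bool = True) -> List[int]:
    """One-hot encode `value` against the categories seen during training."""
    if value in known:
        return [1 if value == k else 0 for k in known]
    if not ignore_unknown_category:
        raise ValueError(f"unknown category value: {value!r}")
    return [0] * len(known)  # the unseen value contributes nothing


print(one_hot("b", ["a", "b", "c"]))
print(one_hot("z", ["a", "b", "c"]))
```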

- Returns:

- A list of DataFrames:

  - Prediction result, obtained by ignoring the true labels of the input data and structured the same as the result table of the predict() function.

  - Statistics

  - Confusion matrix

  - Metrics

  - Error message (optional). Only valid if massive is True when initializing an 'UnifiedClassification' instance.

- build_report()

Build the model report.

Examples

>>> from hana_ml.algorithms.pal.unified_classification import UnifiedClassification
>>> from hana_ml.visualizers.unified_report import UnifiedReport
>>> hgc = UnifiedClassification('HybridGradientBoostingTree')
>>> hgc.fit(data=diabetes_train, key='ID', label='CLASS',
...         partition_method='stratified', partition_random_state=1,
...         stratified_column='CLASS')
>>> hgc.build_report()
>>> UnifiedReport(hgc).display()

- create_amdp_class(amdp_name, training_dataset='', apply_dataset='', num_reason_features=3)

Create an AMDP class file. Then call build_amdp_class() to generate the AMDP class.

- Parameters:

- training_dataset : str, optional

Name of training dataset.

Defaults to ''.

- apply_dataset : str, optional

Name of apply dataset.

Defaults to ''.

- num_reason_features : int, optional

The number of features that contribute most to the classification decision. This reason-code information is displayed during the prediction phase.

Defaults to 3.

- abap_class_mapping(value)

Map the ABAP class.

- add_amdp_item(template_key, value)

Add an item to the AMDP template.

- add_amdp_name(amdp_name)

Add AMDP name.

- add_amdp_template(template_name)

Add AMDP template.

- build_amdp_class()

After adding items with add_amdp_item(), generate the AMDP file from the template.

- create_model_state(model=None, function=None, pal_funcname='PAL_UNIFIED_CLASSIFICATION', state_description=None, force=False)

Create PAL model state.

- Parameters:

- model : DataFrame, optional

Specifies the model for the AFL state.

Defaults to self.model_.

- function : str, optional

Specifies the function name of the classification algorithm.

Defaults to self.real_func.

- pal_funcname : int or str, optional

PAL function name.

Defaults to 'PAL_UNIFIED_CLASSIFICATION'.

- state_description : str, optional

Description of the state as model container.

Defaults to None.

- force : bool, optional

If True, the existing state is deleted.

Defaults to False.

- property fit_hdbprocedure

Returns the generated hdbprocedure for fit.

- generate_html_report(filename)

Save the model report as an HTML file.

- Parameters:

- filename : str

HTML file name.

- generate_notebook_iframe_report()

Render model report as a notebook iframe.

- get_amdp_notfillin_key()

Get the AMDP template keys that have not been filled in.

- load_abap_class_mapping()

Load ABAP class mapping.

- load_amdp_template(template_name)

Load AMDP template.

- property predict_hdbprocedure

Returns the generated hdbprocedure for predict.

- set_framework_version(framework_version)

Switch between the v1 and v2 versions of the report.

- Parameters:

- framework_version : {'v2', 'v1'}, optional

v2: Uses the report builder framework.

v1: Uses a pure HTML template.

Defaults to 'v2'.

- set_metric_samplings(roc_sampling: Sampling = None, other_samplings: dict = None)

Set metric samplings for the report builder.

- Parameters:

- roc_sampling : Sampling, optional

ROC sampling.

- other_samplings : dict, optional

Keys are column names of the metric table:

CUMGAINS

RANDOM_CUMGAINS

PERF_CUMGAINS

LIFT

RANDOM_LIFT

PERF_LIFT

CUMLIFT

RANDOM_CUMLIFT

PERF_CUMLIFT

Values are Sampling instances.
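For orientation on the CUMGAINS and CUMLIFT columns, the sketch below computes the textbook cumulative gains and cumulative lift curves from scores and true labels. Whether PAL evaluates these at every instance or at binned cut points is an assumption not settled by this page, and the RANDOM_* and PERF_* columns are not modelled; gains_and_lift is a hypothetical helper.

```python
from typing import List, Sequence, Tuple


def gains_and_lift(scores: Sequence[float],
                   labels: Sequence[int]) -> List[Tuple[float, float, float]]:
    """Return (fraction_targeted, cumulative_gain, cumulative_lift) after each instance.

    Instances are ranked by descending score. Cumulative gain is the share of
    all positives captured so far; cumulative lift is that gain divided by the
    share of the population targeted (a random ranking gives a lift of about 1).
    """
    total_pos = sum(labels)
    if total_pos == 0:
        raise ValueError("at least one positive label is required")
    ranked = sorted(zip(scores, labels), key=lambda p: p[0], reverse=True)
    n = len(ranked)
    captured = 0
    curve = []
    for i, (_, y) in enumerate(ranked, start=1):
        captured += y
        frac = i / n
        gain = captured / total_pos
        curve.append((frac, gain, gain / frac))
    return curve


print(gains_and_lift([0.9, 0.8, 0.3, 0.1], [1, 0, 1, 0]))
```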


Examples

Creating the metric samplings:

>>> roc_sampling = Sampling(method='every_nth', interval=2)

>>> other_samplings = dict(CUMGAINS=Sampling(method='every_nth', interval=2),
...                        LIFT=Sampling(method='every_nth', interval=2),
...                        CUMLIFT=Sampling(method='every_nth', interval=2))
>>> model.set_metric_samplings(roc_sampling, other_samplings)
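As a hedged illustration of the idea behind method='every_nth' with interval=2, the plain-Python helper below thins a list of curve points by keeping every n-th one. It does not use the Sampling class; the name every_nth is hypothetical, and always retaining the final point (so the curve still reaches its end) is a design choice of this sketch rather than documented behavior of Sampling.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def every_nth(points: Sequence[T], interval: int) -> List[T]:
    """Keep every `interval`-th point, always retaining the last point."""
    if interval < 1:
        raise ValueError("interval must be >= 1")
    kept = list(points[::interval])
    # Append the final point if the stride skipped it.
    if points and (len(points) - 1) % interval != 0:
        kept.append(points[-1])
    return kept


roc_points = [(0.0, 0.0), (0.1, 0.4), (0.2, 0.6), (0.5, 0.8), (0.7, 0.9), (1.0, 1.0)]
print(every_nth(roc_points, 2))
```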

- set_shapley_explainer_of_predict_phase(shapley_explainer, display_force_plot=True)

Use the reason code generated during the prediction phase to build a ShapleyExplainer instance.

When this instance is passed in, its execution results are included in the v2 version of the report.

- Parameters:

- shapley_explainer : ShapleyExplainer

A ShapleyExplainer instance.

- display_force_plot : bool, optional

Whether to display the force plot.

Defaults to True.


- set_shapley_explainer_of_score_phase(shapley_explainer, display_force_plot=True)

Use the reason code generated during the scoring phase to build a ShapleyExplainer instance.

When this instance is passed in, its execution results are included in the v2 version of the report.

- Parameters:

- shapley_explainer : ShapleyExplainer

A ShapleyExplainer instance.

- display_force_plot : bool, optional

Whether to display the force plot.

Defaults to True.


- write_amdp_file(filepath=None, version=1, outdir='out')

Write the template to a file.

- set_model_state(state)

Set the model state from the given state information.

- Parameters:

- state : DataFrame or dict

If state is DataFrame, it has the following structure:

NAME: VARCHAR(100); must contain the entries STATE_ID, HINT, HOST and PORT.

VALUE: VARCHAR(1000), the values according to NAME.

If state is a dict, its keys must include STATE_ID, HINT, HOST and PORT.
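The required structure of state can be checked before calling set_model_state. The sketch below is plain Python that does not call hana_ml: it converts NAME/VALUE rows (the DataFrame layout described above) into a dict and verifies the four required keys. state_rows_to_dict is a hypothetical helper and the values shown are placeholders.

```python
from typing import Dict, Iterable, Tuple

REQUIRED_STATE_KEYS = ("STATE_ID", "HINT", "HOST", "PORT")


def state_rows_to_dict(rows: Iterable[Tuple[str, str]]) -> Dict[str, str]:
    """Convert NAME/VALUE rows into a dict and check the required keys are present."""
    state = {name: value for name, value in rows}
    missing = [k for k in REQUIRED_STATE_KEYS if k not in state]
    if missing:
        raise KeyError(f"state is missing required entries: {missing}")
    return state


rows = [("STATE_ID", "placeholder-id"), ("HINT", "placeholder-hint"),
        ("HOST", "placeholder-host"), ("PORT", "30015")]
print(state_rows_to_dict(rows))
```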

- delete_model_state(state=None)

Delete PAL model state.

- Parameters:

- state : DataFrame, optional

Specifies the state.

Defaults to self.state.

Inherited Methods from PALBase

In addition to the methods above, the UnifiedClassification class inherits methods from the PALBase class; please refer to PAL Base for more details.